Welcome to the information page about Mixing Music

The fine art of mixing single audio tracks together into a whole is difficult, especially when you do not have some guidelines. The name 'mixing' says it all: it means mixing everything together to make a whole, overall sound. This means adjusting overall sound levels and making use of Fader Levels, Panning, EQ, Compression, Reverb, Delay or any other kind of effect to arrive at a well-balanced track. Several issues come up while mixing, concerning both technique and equipment. And of course, as in composing, improvising and goofing around can help you understand the difficult task of mixing. What matters is that the overall mix sounds tight and together, as one. This Mixing page will try to explain some things about mixing: where to start and how to finish the mixing stage with good results. Remember that time and understanding are the way to go; knowing how to mix before starting one is a good thing. Take a good look around and read the information you find on our mixing information page.

Basic Mixing

Mixing or Mix.

Mixing is an art in itself, just as music is, and it is called mixing because the word means exactly what it is about. Mixing, or making a mix, is adjusting all the different instruments or individual tracks so they sound good together, both composition-wise and mix-wise. Starting a mix is a simple task once you understand what to do and what not to do. Later on we will also discuss the static mix and the dynamic mix. Following some common rules, the Basic Mixing chapters explain common mixing standards and give background information on sound.

The Starter Mix, Static Mix and Dynamic Mix.

Like any process that can be broken down into parts, mixing can be divided into three basic steps. When starting a mix, you will usually have some previously recorded tracks that you need to mix further. We will explain how to set up all tracks quickly, so you have a default setup and can progress to the static mix. The starter mix can usually be set up in less than 1 hour of working time. The static mix takes a bit longer, about 4 hours or so. The dynamic mix can take from 4 to 12 hours of working time, and finishing off the mix can take 1 or 2 days or more, depending on creativity, style and experience. So the total working time of a mix divides into three basic parts: first the Starter Mix, then the Static Mix, then the Dynamic Mix. After these comes a fourth stage, finishing off, which is simply working until the mix is finished. Before we discuss these subjects, we will start off with some more details about sound and audio.

Overall Loudness while mixing.

The first mistake is thinking that how loud the mix sounds is important. A lot of beginners who start mixing will try to get their mix as loud as they possibly can. They push up all the faders until they get the overall loudness they want; don't do that. The master VU meter does look attractive when it is showing all green and red lights, and that can fool you into thinking that louder is better. Louder does not mean better when mixing: in the mixing stage loudness is less important, because loudness belongs to the mastering stage. In the mixing stage we try to balance the three dimensions of mixing, creating separation and togetherness at the same time. Though separation and togetherness might seem contradictory, every instrument needs its own place on the stage, and together they sound as a mix. So mixing is more about balancing (adjusting) single tracks so they sound good together. As a general rule on digital systems, we do not let the master track pass 0 dB. Keeping your master peaks somewhere between -6 dB and 0 dB, leaving a little headroom, helps your mix stay free of distortion. Some people like to place a limiter on the master track and try to mix louder that way; maybe it works for them, but we do not recommend it until you are experienced with a plain, dry mix under 0 dB. If you need your mix to be louder, just raise the volume of your speakers instead. That is the normal way of doing it. We will explain later on what to do with the master track of your mixer. When mixing, do not place anything else on the master fader: no plugins, reverb, maximizers, etc. At most, use a brickwall limiter on the master fader with a threshold of -0.3 dB, or one that reduces just 1 or 2 dB only when peaks occur. For real beginners and the not-so-experienced, we recommend nothing on the master fader, with the fader set to 0 dB.
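To see why pushing up every fader can drive the master over 0 dB, here is a minimal Python sketch, assuming floating-point samples where 1.0 is full scale (0 dBFS). The helper names and the 55 Hz test tone are illustrative, not taken from any DAW. It sums two identical, in-phase tracks that each peak at -3 dBFS, the way a mixer sums channels into the master bus.

```python
import math

def db_to_gain(db):
    """Convert a level in dB to a linear amplitude factor."""
    return 10 ** (db / 20)

def gain_to_db(gain):
    """Convert a linear amplitude (1.0 = full scale) to dBFS."""
    return 20 * math.log10(gain)

def sine(freq_hz, peak_db, n=48000, rate_hz=48000):
    """One second of a sine wave peaking at peak_db dBFS."""
    amp = db_to_gain(peak_db)
    return [amp * math.sin(2 * math.pi * freq_hz * i / rate_hz) for i in range(n)]

def mix(*tracks):
    """Sum tracks sample by sample, like channels summed into the master bus."""
    return [sum(samples) for samples in zip(*tracks)]

def peak_dbfs(samples):
    return gain_to_db(max(abs(s) for s in samples))

bass = sine(55, -3.0)
bass_double = sine(55, -3.0)   # a second, in-phase track at the same level
master = mix(bass, bass_double)
print(round(peak_dbfs(bass), 1))    # -3.0
print(round(peak_dbfs(master), 1))  # 3.0 (above 0 dBFS, so it would clip)
```

Real tracks are rarely perfectly in phase, so a sum usually peaks lower than this worst case of +6 dB, but every track you add still uses up headroom.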

Human Hearing and Speakers / Monitors

Everything you hear in the real world is basically mono sound plus lots of ambience and reverb. A singing mouth is a mono source; the world around it adds ambience and reverb to that mono sound.

Even a passing car or singing birds are mono sources in the real world. Stereo speaker systems do not replicate this ideal real-world sound; it is technology against the real world. So how do we recreate a mix that is as good as humanly possible? We try to think in terms of human hearing in the real world and of the disadvantages that common speaker systems have. A stereo system can also recreate ambience, using the reverbs, delays and other plugins you mix in. Anything that works is allowed. What works best is keeping lower frequencies in the middle and panning higher frequencies more outwards to the left and right, or distributing sounds across the stereo field with the intent of placing higher-frequency sounds more outwards and lower-frequency sounds more inwards. Think of it as a stage. Still, stereo speakers and headphones have many disadvantages when it comes to giving human hearing a pleasurable experience.

Speakers come in all shapes and sizes, enabling you to listen to music on your iPod, enjoy a film at the cinema or hear a friend’s voice over the phone. In order to translate an electrical signal into an audible sound, speakers contain an electromagnet: a metal coil which creates a magnetic field when an electric current flows through it. This coil behaves much like a normal (permanent) magnet, with one particularly handy property: reversing the direction of the current in the coil flips the poles of the magnet. Inside a speaker, an electromagnet is placed in front of a permanent magnet. The permanent magnet is fixed firmly into position whereas the electromagnet is mobile. As pulses of electricity pass through the coil of the electromagnet, the direction of its magnetic field is rapidly changed. This means that it is in turn attracted to and repelled from the permanent magnet, vibrating back and forth. The electromagnet is attached to a cone made of a flexible material such as paper or plastic which amplifies these vibrations, pumping sound waves into the surrounding air and towards your ears.

Inside a speaker (figure labels): A. Cone, B. Permanent magnet, C. Electromagnet (coil).

The frequency of the vibrations governs the pitch of the sound produced, and their amplitude affects the volume – turn your stereo up high enough and you might even be able to see the diaphragm covering the cone move.

To reproduce all the different frequencies of sound in a piece of music faithfully, top quality speakers typically use different sized cones dedicated to high, medium and low frequencies.

A dynamic microphone uses the same mechanism as a speaker in reverse to convert sound into an electrical signal. In fact, you can even use a pair of headphones as a microphone!

Thomas Edison is credited with creating the first device for recording and playing back sounds in 1877. His approach used a very simple mechanism to store an analog wave mechanically. In Edison's original phonograph, a diaphragm directly controlled a needle, and the needle scratched an analog signal onto a tinfoil cylinder. What is it that the needle in Edison's phonograph is scratching onto the tin cylinder? It is an analog wave representing the vibrations created by your voice. For example, here is a graph showing the analog wave created by saying the word "hello". When CDs were first introduced in the early 1980s, their single purpose in life was to hold music in a digital format. In order to understand how a CD works, you need to first understand how digital recording and playback works and the difference between analog and digital technologies.

Audio Sampling

In signal processing, sampling is the reduction of a continuous signal to a discrete signal. A common example is the conversion of a sound wave (a continuous signal) to a sequence of samples (a discrete-time signal). A sample is a value or set of values at a point in time and/or space. A sampler is a subsystem or operation that extracts samples from a continuous signal. A theoretical ideal sampler produces samples equivalent to the instantaneous value of the continuous signal at the desired points. Digital audio uses pulse-code modulation and digital signals for sound reproduction. This includes analog-to-digital conversion (ADC), digital-to-analog conversion (DAC), storage, and transmission. In effect, the system commonly referred to as digital is in fact a discrete-time, discrete-level analog of a previous electrical analog. While modern systems can be quite subtle in their methods, the primary usefulness of a digital system is the ability to store, retrieve and transmit signals without any loss of quality.
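As a minimal sketch of sampling and quantization, the Python below samples a 1 kHz sine at 44.1 kHz (the CD sample rate) and stores each sample as a 16-bit signed integer, the PCM format used on audio CDs. The function name and its parameters are illustrative assumptions, and real converters add steps such as anti-aliasing filtering and dither that are left out here.

```python
import math

def sample_and_quantize(freq_hz, rate_hz=44100, seconds=0.001, bits=16):
    """Sample a continuous sine (amplitude 1.0) at discrete times and
    quantize each value to a signed integer, as a PCM converter does."""
    max_code = 2 ** (bits - 1) - 1          # 32767 for 16-bit
    n = int(rate_hz * seconds)              # number of discrete sample times
    samples = []
    for i in range(n):
        t = i / rate_hz                                  # discrete time
        value = math.sin(2 * math.pi * freq_hz * t)      # continuous signal at t
        samples.append(round(value * max_code))          # discrete level
    return samples

pcm = sample_and_quantize(1000)
print(len(pcm))   # 44 samples in one millisecond
print(pcm[:4])
```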

Digital Clipping

Clipping is a form of waveform distortion that occurs when an amplifier is overdriven and attempts to deliver an output voltage or current beyond its maximum capability. Driving an amplifier into clipping may cause it to output power in excess of its published ratings. In digital signal processing, clipping occurs when the signal is restricted by the range of a chosen representation. For example, in a system using 16-bit signed integers, 32767 is the largest positive value that can be represented, and if during processing the amplitude of the signal is doubled, sample values of, for instance, 32000 should become 64000, but instead they are truncated to the maximum, 32767. In digital systems clipping is preferable to the alternative, wrapping, which occurs if the digital hardware is allowed to "overflow", ignoring the most significant bits of the magnitude, and sometimes even the sign of the sample value, resulting in gross distortion of the signal.
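The difference between clipping and wrapping can be sketched in a few lines of Python, using the 16-bit example above. Python integers never overflow on their own, so both behaviours are emulated explicitly; the function names are illustrative.

```python
INT16_MIN, INT16_MAX = -32768, 32767

def clip16(x):
    """Saturate: values outside the 16-bit range stick at the nearest limit."""
    return max(INT16_MIN, min(INT16_MAX, x))

def wrap16(x):
    """Overflow: keep only the low 16 bits (two's complement), dropping the rest."""
    return ((x + 32768) % 65536) - 32768

doubled = 32000 * 2        # 64000 does not fit in 16 bits
print(clip16(doubled))     # 32767: flattened at the top
print(wrap16(doubled))     # -1536: jumps to a negative value, gross distortion
```

A clipped peak is at least flattened in the right direction, while a wrapped one flips to the opposite side of the waveform, which is why clipping is the lesser evil.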

Avoid Clipping while Recording and Mixing

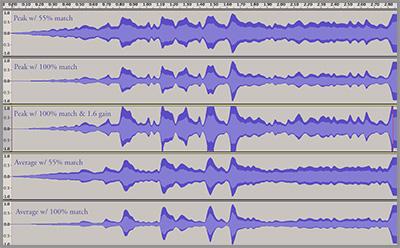

Keep your peaks below -3 dB - Keep peaks below -3 dB on your individual track peak meters, plug-in peak meters and master output peak meters. Meters can be misleading, so don't rely on them alone to catch clipping. Don't worry about loudness; just turn your monitors up, and leave the final loudness to a good mastering studio. Some meters are not peak meters and do not stop at 0. If you are using one of those, such as K-System meters (K-20, K-14 or K-12), please take the time to understand how K-System meters work.

Check for clipping between every plug-in - You have to make sure that clipping is not occurring between plug-ins. Plug-ins usually have meters where you can check the input and output levels. Sometimes a plug-in raises the output and introduces clipping; a later plug-in in the signal chain may reduce the overall output again, but the clipping is still there. So always make sure that clipping is not occurring between any of the plug-ins in the signal chain.

Think of the flow - The signal flows through the signal chain. It starts with the digital recording, then flows through any plug-ins and then through the master output. You must check that the peaks are below -3 dB everywhere in this flow. That means taking charge of your signal flow by checking the individual track meters, plug-in meters and master output meters before calling the mix final.
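The per-stage check described above can be sketched in Python. This is a minimal model under stated assumptions: each plug-in is reduced to a hypothetical gain stage, and each stage clips at full scale the way a fixed-point plug-in would (floating-point hosts can go over 0 dBFS internally without clipping). The stage names are made up for illustration.

```python
import math

CEILING_DBFS = -3.0  # the per-stage peak ceiling recommended on this page

def to_dbfs(peak):
    """Peak amplitude (1.0 = full scale) to dBFS."""
    return 20 * math.log10(peak) if peak > 0 else float("-inf")

def gain_stage(signal, gain_db):
    """Apply a gain, then clip at full scale (models a fixed-point plug-in)."""
    g = 10 ** (gain_db / 20)
    return [max(-1.0, min(1.0, s * g)) for s in signal]

def check_chain(signal, stages):
    """Run the signal through (name, gain_db) stages in order and report
    each stage's peak in dBFS and whether it goes over the ceiling."""
    report = []
    for name, gain_db in stages:
        signal = gain_stage(signal, gain_db)
        peak = to_dbfs(max(abs(s) for s in signal))
        report.append((name, round(peak, 1), peak > CEILING_DBFS))
    return report

track = [0.0, 0.5, -0.5, 0.25]   # peaks at 0.5, about -6 dBFS
chain = [("eq_boost", 9.0), ("later_trim", -9.0), ("master", 0.0)]
for name, peak, over in check_chain(track, chain):
    print(name, peak, "OVER" if over else "ok")
# eq_boost 0.0 OVER
# later_trim -9.0 ok
# master -9.0 ok
```

The master meter reads a harmless -9 dBFS, yet the peaks flattened at the first stage stay flattened (the original -6 dBFS peaks never come back); only checking every stage reveals it.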

Volume or Level.

Because the human ear can detect sounds over a very wide range of amplitudes, sound pressure is usually measured as a level on a logarithmic decibel (dB) scale. In practice we control it with the faders of a mixer or the single volume knob of a stereo system. Because volume is so commonly thought of as just level, beginning users may overlook its possibilities. The volume faders of a mixer all add up into the master fader as a mix: the track levels are summed into the master bus. When a sound or note is played, its frequency and amplitude (level, volume) allow our ears to pick it up and our brains to understand its information. As you can guess, our hearing reacts differently to different frequencies and amplitudes, letting us understand loud or soft sounds and perceive left, center or right, distance and environment. Our hearing is a wonderful natural device.
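The logarithmic decibel scale can be made concrete with the standard definition of sound pressure level, L = 20·log10(p / p0), where p0 = 20 µPa is the reference pressure for sound in air. A minimal Python sketch (the function name is illustrative):

```python
import math

P_REF = 20e-6  # reference pressure in pascals (20 micropascals)

def spl_db(pressure_pa):
    """Sound pressure level in dB SPL for an RMS pressure in pascals."""
    return 20 * math.log10(pressure_pa / P_REF)

for p in (20e-6, 0.02, 1.0, 20.0):
    print(f"{p:>8} Pa -> {spl_db(p):5.1f} dB SPL")
```

A millionfold range of pressure, from 20 µPa to 20 Pa, fits into 0 to 120 dB, which is why a logarithmic scale suits our hearing.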

The Fletcher-Munson chart (equal-loudness contours) shows how loud tones at different frequencies must be to be heard as equally loud, at several loudness levels. As you can see, how loud a note is played affects how strongly its frequency range is perceived. Along with frequency and volume (amplitude, loudness), we also get a sense of direction and distance (depth). Our brains always try to make sense of sounds as if they were produced naturally. Music and mixing are mostly unnatural (or less natural), but our brains understand music better when it is mixed for our natural hearing in a natural way: mixing so that our hearing perceives natural elements correctly (dry signal, reverberation, effects, summing into the master bus). So, for separation as well as togetherness, we can refer first to the volume of a sound, instrument, track or mix. Like balance or pan, volume is an easily overlooked element of a mix. You might prefer to fiddle with effects or other more interesting things, but volume is most important. In fact, volume and pan (balance) are the first things that need to be set when starting a mix, and they stay important throughout the mixing process. Volume matters composition-wise too: the mute button, for instance, is a volume tool that decides whether a part plays at all.

Balance or Pan.

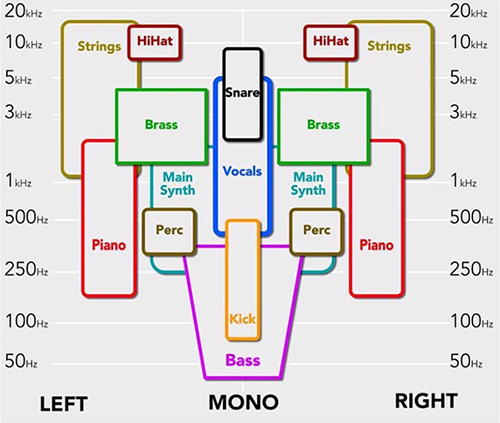

On a single speaker system (mono), where only frequency and volume apply, we would not have to worry about pan or balance, because all sound comes from the center. With a pair of speakers (stereo) it is possible to pan or balance from left through center to right. We call this the left, center and right of the panorama, and it lets us perceive direction from left to right. Just like volume or level, panning or balance is mostly overlooked by beginning users. What can be difficult about setting two controls, a fader and a balance knob? It sounds easy, but planning what you are doing can avoid a muddy or fuzzy mix later on and keep things natural to our hearing. Pan (panorama) and balance are used here to mean the same thing. Panorama is important for where instruments are placed: it gives the first sense of direction. As a common rule, volume faders and balance knobs are the first things to set, and to refer to, when setting up a mix. Beginning users who set up volume and panning without a plan, or without understanding dimensional mixing, are quite often lost and struggle to finish a mix.
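What a pan knob does to the left and right levels can be sketched in Python. Mixers and DAWs use different pan laws (commonly -3 dB, -4.5 dB or -6 dB at center); this sketch assumes the common -3 dB constant-power (sine/cosine) law, and the function name is illustrative.

```python
import math

def constant_power_pan(pan):
    """Return (left_gain, right_gain) for pan in [-1.0, 1.0]
    (-1 = hard left, 0 = center, +1 = hard right).
    Uses a sine/cosine law so left**2 + right**2 == 1 at every position,
    keeping perceived loudness roughly constant as a sound moves."""
    if not -1.0 <= pan <= 1.0:
        raise ValueError("pan must be between -1 and 1")
    angle = (pan + 1) * math.pi / 4      # maps -1..1 to 0..pi/2
    return math.cos(angle), math.sin(angle)

for pos in (-1.0, -0.5, 0.0, 0.5, 1.0):
    left, right = constant_power_pan(pos)
    print(f"pan {pos:+.1f}: L={left:.3f} R={right:.3f}")
```

At center each side gets a gain of about 0.707, which is -3 dB, so a centered sound is not louder than the same sound panned hard to one side.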

Dimensional Mixing.

As a concept, dimensional mixing has to do with 3D (three dimensions). Frequency, amplitude and direction let the listener understand the 3D spatial information, by hearing it with the ears and interpreting it with the brain. When mixing a dry signal towards a naturally understandable signal, we need some effects as well as some basic mixer settings to achieve a natural perception. Setting the pan to the left makes the listener believe the sound is coming from the left; setting it to the center makes the sound seem to come from the center; setting it to the right makes it seem to come from the right. All very easy to understand. By working with frequency we can also influence how the listener perceives depth: sounds with a lot of treble (higher frequencies) are perceived as close, and a duller sound (with less treble) is perceived as more distant (further back). Next, the human brain understands reverberation, for instance when we clap our hands inside a room. The dry clap (its transients) is heard together with reflections coming from the walls (early reflections). Reverberation, especially the delay between the dry clap and the first reflections, makes our brains believe there is some distance and depth, because we first hear the original transient of the clap and then the reflections. The more natural it sounds, the more understandable it is. So there are quite a few influences on what our hearing accepts as 3D spatial information; the goal is to make the listener believe the mix is real. Our hearing likes natural and believable sounds, sometimes referred to as stage depth. With all the controls of a mixer you can influence how the 3D spatial information reaches the listener.
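The delay between the dry clap and its first reflection follows from simple geometry: it is the extra distance the reflected sound travels, divided by the speed of sound (about 343 m/s in air at 20 °C). A minimal Python sketch, using made-up distances for a hypothetical room:

```python
SPEED_OF_SOUND = 343.0  # m/s in air at about 20 degrees C

def reflection_delay_ms(direct_m, reflected_m):
    """Delay of a reflection relative to the direct sound, in milliseconds."""
    if reflected_m < direct_m:
        raise ValueError("a reflection cannot arrive before the direct sound")
    return (reflected_m - direct_m) / SPEED_OF_SOUND * 1000

# Hypothetical room: the clap reaches you directly over 2 m,
# and via the nearest wall over 6 m.
print(round(reflection_delay_ms(2.0, 6.0), 1))  # 11.7
```

The farther away the walls are, the longer this gap becomes, which is one reason the pre-delay setting on a reverb plug-in influences how large a space sounds.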
You can think of volume (fader or level), panorama (balance or pan), frequency (fundamental frequency range) and reverberation (reverb or delay) as tools you can use to make the listener understand the mix you are trying to convey. We will discuss dimensional mixing later on; now let's head to the frequency, or frequency range, of a sound. We perceive distance, direction, space and so on through cues such as volume, frequency, the difference in the time it takes a sound to reach each ear (a sound from the left hits the left ear louder and sooner than the right) and reverberation.
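How small the time difference between the ears actually is can be estimated with Woodworth's spherical-head approximation, ITD = (r / c)·(θ + sin θ). The Python sketch below assumes a head radius of 8.75 cm and a distant source; it is an approximation of a real head, not a measurement.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s
HEAD_RADIUS = 0.0875    # m, an assumed average head radius

def itd_ms(azimuth_deg):
    """Approximate interaural time difference in ms for a distant source,
    using Woodworth's spherical-head formula ITD = (r / c) * (theta + sin(theta)).
    Valid from 0 degrees (straight ahead) to 90 degrees (fully to one side)."""
    theta = math.radians(azimuth_deg)
    return HEAD_RADIUS / SPEED_OF_SOUND * (theta + math.sin(theta)) * 1000

for az in (0, 30, 60, 90):
    print(az, round(itd_ms(az), 2))
```

Even for a source fully to one side, the difference comes out at roughly 0.66 ms, yet the brain uses it to place sounds left or right.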

The Frequency Spectrum.

The frequency spectrum of a mix is usually shown from 0 Hz to about 22,000 Hz (22 kHz); normal human hearing, roughly 20 Hz to 20 kHz, fits within this range. Every instrument plays somewhere in this range, so the spectrum is filled with the sounds of all the instruments or tracks in the mix. On a normal stereo speaker system these frequencies are presented in stereo: one speaker for the left and one for the right. So on a stereo system two frequency spectrums are played (left speaker and right speaker). Together, the sound coming from both left and right speakers makes up the stereo frequency spectrum presented below. Left and right combined (stereo) make up the center (mono).

This chart shows a commercial recording: a finished song or mix. The x-axis shows the frequency range of the spectrum, 0 Hz to 22 kHz. The y-axis shows level in dB. On digital systems nowadays we go from 0 dB (loudest) down to about -100 dB (soft or quiet). In this chart (the AAMS Analyzer Spectrum Display) you can see that the lower frequency range, below 1 kHz, carries the most level. The loudest levels are at about 64 Hz and -35 dB, while the softest levels are at about -65 dB and range from 4 kHz to 22 kHz. The difference is 65 dB - 35 dB = 30 dB! As a rule of thumb, every 10 dB of level reduction halves the perceived volume (times 0.5), so 30 dB is three halvings, roughly one-eighth as loud. Instruments like bass or bass drum (which have more low frequencies in their range) generate far more power (level) than the hi-hat or other higher-frequency instruments. Even though we may hear a hi-hat clearly, the hi-hat by itself produces mainly higher frequencies and generates far less volume (amplitude, power, level) than a bass drum or bass. This is how our hearing naturally works. But a master VU meter of a mix only displays overall level, so you are actually watching mostly the lower frequencies respond. From left to right, mainly above 120 Hz and up to 22 kHz, the levels of the frequencies all go down. Speakers show more movement when playing lower frequencies and less when playing higher frequencies. This chart is taken from AAMS (Auto Audio Mastering System), a software package for mastering audio that can also show a spectrum and give suggestions for mixing based on source and reference calculations. This can be handy for investigating the sound of finished mixes or tracks, showing frequencies and levels.
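The 30 dB gap in the chart means different things depending on what you measure. This Python sketch converts it three ways: amplitude (20·log10), power (10·log10), and the rule-of-thumb perceived loudness used above (halving per 10 dB). The function names are illustrative.

```python
def amplitude_ratio(db):
    """Linear amplitude (voltage or pressure) ratio for a level difference in dB."""
    return 10 ** (db / 20)

def power_ratio(db):
    """Power (energy) ratio for a level difference in dB."""
    return 10 ** (db / 10)

def loudness_ratio(db):
    """Rule-of-thumb perceived loudness ratio: every 10 dB halves or doubles it."""
    return 2 ** (db / 10)

diff_db = -65 - (-35)   # highs at -65 dB versus lows at -35 dB: -30 dB
print(round(amplitude_ratio(diff_db), 4))  # 0.0316
print(round(power_ratio(diff_db), 4))      # 0.001
print(round(loudness_ratio(diff_db), 4))   # 0.125
```

So the highs carry about a thousandth of the power of the lows, yet by the rule of thumb they still sound about one-eighth as loud, which is why the meters are dominated by the low end.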

Human Hearing.

Human hearing is perceptive and difficult to explain; it works logarithmically. Lower frequency ranges measure louder and higher frequencies measure softer, yet both are heard well (perceived naturally) at their own levels. Not only is human hearing good at understanding frequencies and perceiving them logarithmically; the acoustics of rooms and reverberation also play a great part in understanding the direction of sound. Generally, a natural mix will be more understandable to the listener. As explained before, everything you hear in the real world is a mono sound with the world's reflections on it; on a busy day, many mono sounds at once. We have two ears and hear in stereo because our brains need to know the direction a sound is coming from, but what we hear in the real world is actually a mono sound source with reverberation on it. Only technology turns mono into stereo; in nature a stereo sound does not exist. A mono source can still be located because our two ears sit apart from each other and hear slight differences; our brain interprets the difference between the two ears as direction information. Where is the mono sound placed? Left or right? In the middle? Above? Far or near? That depends on the differences between the two ears and on the reverberation added.

Tuning your Instruments

For all instruments, including drums, bass, guitar, piano, samples, etc., in one word ALL instruments, make sure they are in tune. That means using a tuner to tune your instruments. Even VSTi synths and sampling devices: tune them. One good approach is to use a tuner and tune to zero detuning, or you could use automatic pitch correction (such as Antares Auto-Tune with fast settings and the right key or scale settings). Once you start tuning new or older projects, you will notice that an in-tune mix sounds much better than an out-of-tune mix. Even drums can be tuned drum by drum, and the result will be a better mix. Tuning is the most forgotten step, but it is very important. We use 440 Hz tuning as the standard; 432 Hz tuning is a discussion of its own, because some people claim 432 Hz is more natural or closer to real-world frequencies. Either way, 440 Hz is the standard and there is nothing wrong with it, as long as your instruments are tuned.
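Tuners usually show how far a note is off in cents (100 cents = 1 semitone). This Python sketch computes that offset from two frequencies; the function name is illustrative.

```python
import math

def cents_off(measured_hz, target_hz):
    """How far a measured pitch is from its target, in cents
    (100 cents = 1 semitone, 1200 cents = 1 octave)."""
    return 1200 * math.log2(measured_hz / target_hz)

print(round(cents_off(443.0, 440.0), 1))  # 11.8 (sharp)
print(round(cents_off(432.0, 440.0), 1))  # -31.8 (about a third of a semitone flat)
```

An instrument tuned to A = 432 Hz therefore sits about 32 cents flat of one tuned to 440 Hz; mixing the two would sound clearly out of tune.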

The Basic Frequency Rule.

The rule for mixing is that the bottom end, the lower frequencies, matters most, because the lower frequencies take up so much headroom and have the biggest effect on the VU meters (dynamic level). The lower frequencies fill up a mix and are the main portion to look after. The VU meter mainly shows you how the lowest fundamental frequencies are behaving: it responds strongly to the lower frequencies, where most of the energy in a mix sits, and much less to the higher frequencies. The fundamentals that make up most of a mix's loudness range from 0 Hz to about 1 kHz, and these show clearly on a VU meter. The range from 0 Hz to 4 kHz is what the VU meters show as loudness, and it is the range where you must pay attention to detail. If you compare the loudness of a bass drum and a hi-hat, you will understand that the hi-hat (though it can be heard well) carries far less power than the bass drum does. A beginner's mistake is mixing the bass drum and bass loud and then trying to add more instruments into the mix, which leaves you limited headroom (dynamic level). The most common tools for adjusting frequency are EQs (equalizers), but as we will learn later on, there are quite a few more tools for adjusting the frequency spectrum. As explained before, volume (amplitude), panorama (pan or balance) and frequency range (EQ or compression, limiter, gate) are the main components (dimensions) of mixing. Before we add reverberation, we must get a mix that is dry and uses these components; we call this a starter mix.

Notes and Frequencies.

To make frequencies more understandable, imagine a single instrument playing all sorts of notes and melodies in time along a timeline. To get some feeling for where notes sit in the frequency spectrum and how to range them, the chart below shows a keyboard and some instruments with the range of notes (frequency range) they can normally play. Every note from C1 to C7 on a keyboard has its own main (fundamental) frequency. You can see bass, tuba, piano, etc. in the lower range and violin, piccolo and again piano, which can play high notes.
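The main frequency of any keyboard note can be calculated in 12-tone equal temperament from A4 = 440 Hz: each semitone multiplies the frequency by 2^(1/12). This Python sketch assumes scientific pitch notation (middle C = C4); some charts number octaves differently (for example calling middle C "C3"), so check which convention your chart uses.

```python
NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]

def note_frequency(name, octave, a4=440.0):
    """Fundamental frequency of a note in 12-tone equal temperament,
    using scientific pitch notation (middle C = C4, A4 = 440 Hz)."""
    semitones_from_a4 = (NOTE_NAMES.index(name) - NOTE_NAMES.index("A")
                         + 12 * (octave - 4))
    return a4 * 2 ** (semitones_from_a4 / 12)

for octave in range(1, 8):
    print(f"C{octave}: {note_frequency('C', octave):7.2f} Hz")
```

Each octave doubles the frequency, so C1 to C7 spans roughly 33 Hz to 2093 Hz, which matches the idea that most fundamentals sit well below 4 kHz.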

It is important to know every instrument's range, but when mixing it matters more to know how to give each instrument a place inside the available spectrum. The colored areas are the fundamental frequency ranges. When we need to do something about the quality of an instrument, we will most likely look inside its fundamental frequency range; boosting or cutting in these areas changes how the instrument comes across. More interesting are the black areas of the chart above, which represent the frequencies that are not fundamental. Because these frequencies are not fundamental, we are likely to cut heavily in these areas with EQ when we want to save headroom in the mix and gain some clarity (separation). Most of the hidden headroom of a mix is taken up by the first and second bass octaves (0 Hz - 120 Hz). Most notes played or sounds made by instruments have a fundamental frequency below 4 kHz. And when you really look at the fundamentals of a mix, the frequencies from 50 Hz to 500 Hz are what really fill it; almost every instrument plays part of its range here, so it is very crowded. The misery area between 120 Hz and 350 Hz is especially crowded and is the second frequency range to look after (the first is 0 Hz - 120 Hz). The headroom required to mix any frequency properly is inversely proportional to its audibility or overall level: the lower you go in frequency, the more hidden energy, or headroom (dynamic level), it costs the mix. This is why the first two frequency ranges need to be the most efficiently negotiated parts of any mix (the foundation of the house), and they are the part the inexperienced fiddle with most. Decide which instruments will be in this range and where their fundamental notes are played. Keeping what is needed and removing what is not (reduction) works better than just making everything louder (boosting).
To hear all the instruments inside a mix, you need to separate them using volume, panorama and frequency range. You can get more clarity by cutting the higher frequencies out of the bass and playing a piano on top with its lower frequencies cut. By this frequency rule they do not affect each other, and the mix will sound less muddy and clearer (separation). Bass and piano have each found their place inside the available frequency spectrum of the mix, and following the fundamental frequency range rules you will hear them together, sounding clean. For most instruments a cut from 0 Hz up to 120 Hz is not uncommon; in fact, cutting lower frequencies is the most common move. Apart from the bass drum and bass, which really need their low information to be present, we are likely to save some headroom on all other instruments or tracks by cutting part of their lower frequency range, anywhere up to 120 Hz. The lower-midrange misery area between 120 and 350 Hz is the second pillar of the warmth in a song, but it can become unpleasant when distributed unevenly. Pay attention to this range, because almost all instruments are present here.
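As a minimal sketch of a low cut at 120 Hz, the Python below implements a first-order (RC-style) high-pass filter and measures how much it lowers test tones at a few frequencies. This is a deliberately simple teaching filter with a gentle 6 dB per octave slope, much shallower than the steep cutoff filters recommended on this page; the function names and the 48 kHz sample rate are assumptions.

```python
import math

RATE = 48000  # samples per second

def one_pole_highpass(samples, cutoff_hz, rate_hz=RATE):
    """First-order RC high-pass (about 6 dB/octave below the cutoff):
    y[n] = a * (y[n-1] + x[n] - x[n-1]), with a = RC / (RC + dt)."""
    rc = 1.0 / (2 * math.pi * cutoff_hz)
    dt = 1.0 / rate_hz
    a = rc / (rc + dt)
    out, prev_x, prev_y = [], 0.0, 0.0
    for x in samples:
        y = a * (prev_y + x - prev_x)
        out.append(y)
        prev_x, prev_y = x, y
    return out

def tone(freq_hz, seconds=1.0, rate_hz=RATE):
    """A full-scale sine test tone."""
    return [math.sin(2 * math.pi * freq_hz * i / rate_hz)
            for i in range(int(seconds * rate_hz))]

def rms(samples):
    return math.sqrt(sum(v * v for v in samples) / len(samples))

def level_change_db(freq_hz, cutoff_hz=120.0):
    """RMS level change (dB) when a test tone passes through the high-pass."""
    dry = tone(freq_hz)
    return 20 * math.log10(rms(one_pole_highpass(dry, cutoff_hz)) / rms(dry))

for f in (30, 120, 1000):
    print(f, "Hz:", round(level_change_db(f), 1), "dB")
```

Expect roughly -12 dB at 30 Hz, about -3 dB at the 120 Hz cutoff and almost no change at 1 kHz: the low rumble loses energy while the instrument's body passes through.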

Fundamental Frequencies and their Harmonics.

When notes are played you expect their main frequency to sound each time, but you will also hear much more than just the fundamental frequency. When an instrument plays notes, you can expect a fundamental frequency range to sound: the frequency range of that particular instrument. Recorded instruments such as vocals also contain reverb and delay from the room they were recorded in, and quite a few instruments come with body, snare or string sounds as well (even those nasty popping sounds). The whole frequency range of an instrument is made up of its fundamental frequencies, their harmonics and several other sounds. When mixing we like to talk in terms of the frequency range (fundamental frequencies) the instrument or track plays in. That tells us what is important (the frequency range of the instrument or track) and what is less important (the frequencies that fall outside this range).

Harmonics.

A harmonic of a wave is a component frequency of the signal that is an integer multiple of the fundamental frequency. If f is the fundamental frequency (the first harmonic), then two times f is the second harmonic, three times f is the third harmonic, and so on. The harmonics are all periodic with respect to the fundamental frequency and, in most instruments, typically get lower in level as they go up.


For example, for a note with a fundamental of 440 Hz, the second harmonic is 440 × 2 = 880 Hz, the third 440 × 3 = 1320 Hz, and so on. Harmonics multiply quickly across the whole frequency spectrum. You can expect the range 4 kHz to 8 kHz to be filled with harmonics; if you are looking for some sparkle, 4 kHz to 8 kHz is the place to be. Above 8 kHz, up to 16 kHz, expect all the fizzle and sizzle (air). The hi-hat sounds in the range 8 kHz to 16 kHz, and this is where the crispness of your mix resides. As the harmonics go up in frequency, their amplitude or volume generally gets softer: the fundamental plays loudest, and the harmonics decrease in amplitude step by step.
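The harmonic series of a note can be listed with a short Python sketch. For the levels it assumes an ideal sawtooth wave, whose harmonic amplitudes fall as 1/n; that roll-off is an illustration only, since real instruments each have their own harmonic balance. The function names are illustrative.

```python
import math

def harmonic_series(fundamental_hz, count, max_hz=20000):
    """(harmonic number, frequency) pairs: integer multiples of the fundamental,
    stopping at max_hz (roughly the upper limit of hearing)."""
    return [(n, n * fundamental_hz) for n in range(1, count + 1)
            if n * fundamental_hz <= max_hz]

def sawtooth_level_db(n):
    """Relative level of harmonic n in an ideal sawtooth (amplitude 1/n)."""
    return 20 * math.log10(1 / n)

for n, freq in harmonic_series(440, 8):
    print(f"harmonic {n}: {freq} Hz, {sawtooth_level_db(n):.1f} dB")
```

Even from a 440 Hz note, 45 harmonics fit below 20 kHz, which shows how quickly harmonics fill the upper spectrum while each one gets softer.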

Here are some instruments with their fundamental ranges and harmonic ranges.

In this chart you can see that the highest fundamental frequency (the violin) is 3136 Hz. So as a general rule you can say that all fundamental frequencies stop somewhere below 4 kHz. For most instruments, the commonly played notes are in the lower frequency range, below 1 kHz. You can also see that the bass drum reaches down to about 50 Hz and the bass to about 30 Hz. This means the area from 0 Hz to 30 Hz is normally not used by instruments; it contains mostly rumble and pop noises and is therefore unwanted. Cutting heavily with EQ in this area can take the strain of unwanted power off your mix, leaving more headroom and a clearer mix as a result (use the steepest cutoff filter you can find). In any case, try to think in ranges when building a mix inside the whole frequency spectrum: decide where to place instruments and what you can cut from them to make headroom (space) for others. Need more punch? Look in the lower range of the instrument, up to 1 kHz (4 kHz max). Need more crispness? Look in the higher ranges of the instrument, 4 kHz to 12 kHz, where the harmonics are. Knowing where things can be done in the spectrum, you can now decide how to EQ a mix or use compression, a gate, a limiter and effects to correct it. Cutting out what is not needed and keeping what is needed is how a mix starts. Starting a mix means getting a clean mix as a whole before adding more to it. Effects like reverb or delay will be added later on (static mix); let's first focus on what is recorded and on getting that clean and sounding good.
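To help think in ranges, the frequency areas described on this page can be collected into a small lookup table. This Python sketch uses the page's own rough guideline boundaries (they are not hard rules), and the labels and function name are illustrative.

```python
# Frequency ranges as described on this page: rough guidelines, not hard boundaries.
BANDS = [
    (0, 30, "sub-rumble: mostly rumble and pops, usually cut"),
    (30, 120, "first bass octaves: bass drum and bass, most of the hidden headroom"),
    (120, 350, "lower mids / misery area: warmth, easily crowded"),
    (350, 4000, "upper fundamentals: punch and body"),
    (4000, 8000, "harmonics: sparkle"),
    (8000, 16000, "air: fizzle, sizzle, hi-hat crispness"),
]

def describe(freq_hz):
    """Return the page's description of the range a frequency falls in."""
    for low, high, label in BANDS:
        if low <= freq_hz < high:
            return label
    return "above 16 kHz: beyond the ranges described here"

for f in (20, 60, 200, 1000, 6000, 12000):
    print(f, "Hz ->", describe(f))
```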

Recorded Sound.

First and foremost, composition-wise and recording-wise, all instruments and tracks need to be recorded clean and clear. Use the best equipment you can when recording tracks. Even when playing with MIDI and virtual instruments, all recordings need to be clean, clear and crisp. The recorded sound is important, so record as well as you can. During mixing the recorded sound can be adjusted to what we find pleasant to hear, so knowing where an instrument or track will fit in gives you an idea of what you can do to adjust it, and also of how to record it. Getting a mix where you hear each instrument play (separation) while it still has togetherness as a whole also means thinking about composition and recording.

The Fundamental Mix and Swing

The overall level of the whole mix, as it shows on the master bus meter, is basically not important. Peaks at -16 dB or at -6 dB on the master are both fine; any level that does not clip is OK. So do not try to make the mix loud; just mix at whatever level you end up with.

Next is the swing. What that swing is depends on the role of the instruments. So how do you decide how to adjust them to each other? The approach that probably works best is to go by frequency, from low to high.

The bass drum (kick) and bass are the instruments that sit in the low end. Shape them carefully first, and adjust the kick and bass until you get a good sound and swing between them, using volume, panning, and some EQ and compression.

On the EQ side in particular, expect to do quite a lot of cutting: steep low and high cutoff filters with slopes of -48 dB or even -96 dB per octave are often needed. Expect low cuts placed as high as 50 Hz. People may say they can hear (or feel) content at 5 Hz or 25 Hz, and there is some truth in that, but cutoff filters on the kick and bass are sometimes still needed, and in a mix you will usually end up cutting a lot more than you initially think.
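The -48 dB and -96 dB figures are slopes per octave. The sketch below estimates how much a low cut removes at a given frequency, assuming a Butterworth filter shape (an assumption; real EQ plugins may use other filter responses). An n-th order Butterworth filter rolls off at about 6n dB per octave, so 48 dB/octave is modelled as order 8.

```python
import math


def highpass_gain_db(freq_hz: float, cutoff_hz: float, slope_db_per_octave: int) -> float:
    """Gain (dB) of an idealised Butterworth high-pass filter.

    An n-th order Butterworth filter rolls off at about 6*n dB per octave,
    so a 48 dB/octave slope is modelled as order 8 and 96 dB/octave as order 16.
    """
    order = slope_db_per_octave // 6
    ratio = cutoff_hz / freq_hz
    return -10 * math.log10(1 + ratio ** (2 * order))


# Example: a 48 dB/octave low cut at 50 Hz, as might be used on a bass drum
for f in (25, 35, 50, 70, 100):
    print(f"{f:>4} Hz: {highpass_gain_db(f, 50, 48):7.2f} dB")
```

At the cutoff the filter is about 3 dB down, one octave below it about 48 dB down, and one octave above it the signal is practically untouched, which is why such steep cuts remove rumble without thinning the part you keep.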

For the swing, you still adjust the instruments from LOW to HIGH frequency. When the kick and bass sound good, mix-wise and swing-wise, and have a good feel together, move on to the next instruments in line, for instance guitar and piano. Mix and swing those two together, just as you did with the kick and bass (listen to both channels together until they feel good). Then bring the kick and bass back in and listen to all four tracks together. Hear what is wrong or right, and adjust the kick, bass, guitar and piano until the mix and swing come back. Then move on to, say, lead vocals and background vocals, and repeat the procedure until you reach the end of your tracks, instruments and vocals. Never adjust the master fader; keep it at 0 dB.

In general, the lower frequencies are more important and need a more centred pan, while higher up in frequency you can pan further out to the left or right. So combinations like guitar and piano usually need to be panned away from each other, further outwards. The higher frequencies can be centred or panned left and right, even hard panned. The reason is that frequencies from 0 to about 250 Hz (up to roughly 666 Hz) need to come out of the left and right speakers at equal level to reproduce the low end well and correctly. Higher frequencies are easier to project through the left, right or centre, and mostly do not affect the speakers' performance the way low frequencies do. Technical details aside, get some swing into the mix by pairing instruments or tracks that belong together, working from low to high. Finishing a mix this way seems to me a very fast working order that works. Mixing is a long-term effort anyway, so it takes time, but rules like the ones I give here are important so you do not get frustrated (why does my mix sound so bad?).
Anyway, expect each instrument, track or vocal to be cut hard with EQ at both the low and the high end, sometimes a lot more than you would expect.

If the hi-hats, for instance, are important for the swing of the music, expect to put EQ cutoff filters (especially high cuts) on all other instruments to keep the hi-hats clear of high frequencies coming from other instruments.

Expect the rule that the kick and bass sit in the centre, but they also need a clear path. So other instruments are better panned further out to the left or right, and high-sounding instruments can perhaps be panned even further out.

Also expect to pan more than you think. So: expect more cutoffs than you think, and more panning than you think. Then listen whether instruments are still masked by others. Try to give every instrument a clear path, keeping human hearing in mind: bass-heavy instruments should come from the centre (both speakers at the same level), while panning towards the left and right speakers is more for mid- and high-frequency instruments. Expect more panning and more cutoffs! I had the same problem myself: I thought any EQ cutoff was a loss of sound. That is true, but most of the time the cutoffs can be stronger and reach further into the frequency range than you think. Mix feel and swing are what matter. Swing basically means keeping the song's groove in tempo: instruments that interact need to keep their swing together and not lose it through bad EQ, panning or levels. Look for instrument combinations, get some togetherness in their mix and swing, and adjust both until that is done. Then move on to other combinations of instruments: drums, bass, guitar, piano, keys, strings, brass, vocals, etc. The important point is that, frequency-wise, you need both the centre and the left and right sides to cover the frequency domain in the way speakers work best. Do not keep pushing the mix up with more power and higher levels until the master reaches its maximum; keep moderate levels, in the green on the meters. Think about volume, pan, EQ and maybe some compression. Those are your tools for setting up a good mix, together with smart frequency-wise thinking: bass frequencies in the centre, highs panned further out. The same system works for stage mixing: low frequencies in the centre, the rest panned more to the left or right.
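To see what panning actually does to the two speaker feeds, here is a sketch of a constant-power (sine/cosine) pan law. This is one common pan law, assumed here for illustration; DAWs offer others, so check which one yours uses. The pan positions in `PAN_PLAN` are hypothetical values that follow the rule above: low-frequency and lead parts centred, mid and high parts panned outwards.

```python
import math


def constant_power_pan(pan: float) -> tuple[float, float]:
    """Left/right gains for a pan position from -1.0 (hard left) to +1.0 (hard right).

    Uses a sine/cosine (constant-power) law: L^2 + R^2 = 1 at every position,
    so a centred source gets about 0.707 (-3 dB) in each speaker.
    """
    if not -1.0 <= pan <= 1.0:
        raise ValueError("pan must be between -1.0 and 1.0")
    angle = (pan + 1.0) * math.pi / 4  # maps -1..+1 onto 0..pi/2
    return math.cos(angle), math.sin(angle)


# Hypothetical pan plan following the low-centre / high-wide rule from the text
PAN_PLAN = {
    "kick": 0.0,
    "bass": 0.0,
    "lead vocal": 0.0,
    "guitar": -0.5,
    "piano": 0.5,
    "hi-hat": 0.7,
}

for track, pan in PAN_PLAN.items():
    left, right = constant_power_pan(pan)
    print(f"{track:<11} pan {pan:+.1f}: L {left:.3f}  R {right:.3f}")
```

The centred kick, bass and vocal get equal gain in both speakers, which is what lets both speakers share the low-frequency work.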

Less is More

The way to look at a mix is: less is more. Often a mix is filled too much, so we need a lot more cutting than adding. Before we use reverbs and delays, think of volume and pan, EQ and compression, even limiting. The starter mix we discuss here must first be set up and cleaned of unwanted sound. Using mono sources, such as mono instruments, is not wrong at all, because they are easier to place and easier to deal with in a starter mix. We just cut more than we bring in. Less is more.

The working place of audio

The equipment, how many plugins you have, how expensive your gear is: never be fooled by that. Basic functions like volume, pan, EQ and compression must be your first thought when mixing tracks. Some people also have big discussions about their room sound; that is not needed as much, as long as you know what you are doing to get a mix right. Do not skip volume, panning and EQ; they must be the first thing on your mind, so keep working on them until you get it, and do not move on until you understand this. It is the mono source, volume, pan and EQ that must be handled first for each recorded track or instrument. Tuning is part of it too. A starter mix should sound dry but good. Do not add things like delay or reverb while you do not yet understand that you first need to control the source. Whether your equipment is good enough is not the discussion here; think about what you can do with the current mix to make it all clearer and cleaner. Less is more. The choice between speakers and headphones is not an issue either; do as you like. Since we do not use any reverb at this stage, only volume, pan and EQ, you can hear it well on both systems. The room you play in is also not that important (for now).

Cutting / Removing is better than Adding / Gaining.

A low cut with EQ on all instruments except the kick and bass is essential for getting the heavy bass out of every other instrument, vocals included, so that the kick and bass can shine in their own low frequency range. Cutting the kick and bass in the mids and highs can also help clear your mix, so that all other instruments above the kick and bass frequencies get to shine as well. Even if you have no kick or no bass (or neither), rethink your tracks frequency-wise: the instruments that really need the low frequencies keep them, and you cut them out of the rest. Usually the cut covers at least 0 to 120 Hz, but depending on the instrument, try to cut out as much of the lows as possible without really hurting the sound of each track. EQ is essential in mixing, and beginners and even experienced mixers sometimes do not understand how much cutting is needed. Mostly the cut needs to be much more rather than much less. Understanding EQ here means making rough low cuts with a 48 dB per octave slope, placed somewhere in the 0-666 Hz range, on every instrument. The kick or bass sometimes needs a cut in the 0-50 Hz range as well to make them work together. Either way, starting from the kick and bass (or, if you do not have them, from a mental scan of the mix), decide which instruments need to keep their low frequencies and which do not. Starting with a low cut per instrument can clear up your mix so that you can at least hear every instrument or track. Then the EQ work is not over: next, work on each instrument to get a steady and natural sound. If you have vocals, try to get them peaking at around -9 dB, and once your vocals sound more or less correct, compare each track against the main vocals. So start by playing only the kick and bass; getting them to work with each other can take some EQ work. But also compare kick against vocals, and bass against vocals.
Each time, play one track together with the vocals and adjust its volume so that it sits correctly against the vocals. In other words, every instrument needs to be compared against the vocals. If you do not have vocals, pick the melody or the most dominant part of your song as the instrument to compare against. The main vocals mostly need to be heard all the time (otherwise we listeners cannot follow the lyrics), so they make a good reference. Of course your main vocals must sound good and be EQ'd correctly beforehand. The main point of all this is getting the frequency spectrum right for each instrument or track with EQ, especially cutting the lows out of every track that does not need them (where they might interfere with tracks that do).

The second EQ step is to decide which hi-hats or other high signals (snare drum, hats, house-style hats and anything else that needs the high band) must keep their highs, while cutting the highs out of all other instruments and vocals, even FX or group tracks. So each instrument or track needs at least a low cut and also a high cut. We keep comparing, so that the kick and bass sound correct and have some swing in them, and so that the main vocals stay clear all the time. You will return to EQ again and again, even deep into a long mixing session; that is normal. Just learn that low cuts can be quite heavy, and that can be mind-blowing: if you think EQ cuts are bad for the sound, you might be wrong. Low cuts can feel hard to do because you lose so much power from the sound, but that is exactly what is needed. EQ often needs hard cuts, just to make room for everything sounding out of all your instruments and tracks. EQ is the number one thing to return to when getting a mix right. So keep returning to EQ and do not leave it alone too fast. Do not jump on the compression or FX bandwagon too soon; it may blur your mix further, until you cannot even hear what needs to be done. Stuck? Read all of the above again. Mixing is mostly EQ, so spend a good long time on it before adding other things.

Throwing in reverb or delay (too early) often flavours the sound of instruments, and most beginners start by adding these kinds of effects, trying to make more of a sound they like. Well, just don't! You do not have to add effects at first; you have to decide what stays and what must go. Besides setting up some togetherness between all combined tracks, you will need headroom for later freedom (creative things) to add to the mix. It is quite easy to fill your mix with mud; adding a reverb or two can do it. A beginner will think they can cram in sounds and instruments, place effects and be done. It is quite easy to make a booming sound by adding all kinds of effects or just boosting the EQ. Do not do that; stay away from adding when your mix as a whole is already blown out of proportion. Taking away mud once you have added it is a hell of a job, although it can still be done with EQ low and high cuts (low cuts are needed, as I explained before). So starting with a nice clean mix that keeps all the important sounds (without adding) is much better and gives less chance of muddiness. Remember to cut more than you boost or gain. Manual editing comes first: decide what must be removed and what can stay, leaving some headroom for further mixing. This is quite a task. In most cases EQ (equalization) is used to work on the frequency spectrum (range) as a whole. But in a DAW you can also delete or mute what is not needed. You can decide to cut all lower frequencies out of a hi-hat simply because you expect they are not useful, leaving some frequency space (headroom) in the lows for other instruments to play. This kind of cutting (taking the hi-hat out of the lower frequency range, leaving that space unaffected) is the way to give every instrument its own place inside the whole frequency spectrum, or mix.
Level (fader), balance (pan), EQ and compression (limiting and gating) are good tools for a basic mix setup. A good start means better results later on, when you add more to the mix to make it sound better and more together. Starting with a clean mix is starting with a clean slate. With EQ, for instance, cutting can be done with a narrow, steep bell filter (high Q), and boosting with a wider bell filter (low Q).
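The narrow-versus-wide choice is usually set with the Q control. Assuming the bandwidth definition from Robert Bristow-Johnson's Audio EQ Cookbook (other plugins may define Q slightly differently), the sketch below converts between Q and bandwidth in octaves, so you can see that a high Q gives the narrow cutting bell and a low Q the wide boosting bell. The example Q values are illustrative.

```python
import math


def q_to_octaves(q: float) -> float:
    """Bandwidth in octaves of a bell (peaking) EQ band with quality factor Q."""
    return (2 / math.log(2)) * math.asinh(1 / (2 * q))


def octaves_to_q(bandwidth_octaves: float) -> float:
    """Quality factor Q for a bell band of the given bandwidth in octaves."""
    return 1 / (2 * math.sinh(math.log(2) / 2 * bandwidth_octaves))


# A narrow, steep cut versus a wide, gentle boost
for label, q in (("narrow cut", 8.0), ("one octave", octaves_to_q(1.0)), ("wide boost", 0.7)):
    print(f"{label:<10} Q = {q:5.2f} -> {q_to_octaves(q):.2f} octaves wide")
```

A one-octave bell works out to Q of about 1.41; a Q of 8 is less than a fifth of an octave wide, which is the kind of surgical cut the text recommends.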

The Master Fader.

What not to do while mixing is adjust the master fader every time you need to correct the overall level of your track: keep the master fader at 0 dB at all times. (The only exception is when you use a master fader to set the volume of your monitor speakers, headphones or listening system; then you may adjust that single monitor fader on your desk while mixing.) This means that all other master faders (soundcard, recording program, sequencer, etc.) must stay at 0 dB while mixing. The same goes for the master fader that sums up the mix and for the master balance (master pan), which must always stay centred. The main reason is simple: the master fader is not for mixing, so leave it alone. When you set the main master bus (summing) fader below 0 dB you lower the overall volume. That might seem plausible, but especially on digital systems you can end up not hearing distortion while you push the instrument faders upwards. Lowering the master fader also leaves you less dynamic range: the internal mix can go over 0 dB (creating internal distortion) without it being visible on the VU meter or lighting up the limit LED, so you get no warning that you are going over 0 dB. When a signal goes over 0 dB on a digital system, the signal distorts (set your DAW to 32-bit float processing to reduce the risk of internal clipping), and you will not notice it when it happens internally. Whether you hear it or not, this is (mostly) not acceptable. Keep all master faders and the master balance in the same position while mixing, preferably at 0 dB. Also, the human ear hears frequencies differently at different volumes (loudness): listening softly presents the mix to your hearing in one way, and when you raise the volume it will sound slightly different.
So listening loud or soft is close, but it differs. If you like to listen loud, it is always good to play your mix softly and hear what happens to the sound (do parts disappear?). It is a good check of whether your mix stands up both loud and soft. How human hearing responds is shown in this chart.
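To make the "invisible over" concrete, here is a small sketch assuming a floating-point mix bus and a 24-bit fixed-point output stage (both assumptions for illustration; the track values are made up). Three tracks that each peak at -6 dBFS can sum to well above 0 dBFS on the bus, and that over only turns into hard clipping when the signal is converted to fixed point.

```python
import math


def peak_dbfs(samples):
    """Sample-peak level in dBFS, where 1.0 is full scale (0 dBFS)."""
    peak = max(abs(s) for s in samples)
    return -math.inf if peak == 0 else 20 * math.log10(peak)


def sum_bus(*tracks):
    """Sum tracks sample by sample like a floating-point mix bus (nothing clips here)."""
    return [sum(frame) for frame in zip(*tracks)]


def to_fixed_point(samples, bits=24):
    """Convert to signed integers as an output/converter stage would; overs are clipped."""
    full_scale = 2 ** (bits - 1) - 1
    return [max(-full_scale - 1, min(full_scale, round(s * full_scale))) for s in samples]


# Three hypothetical tracks, each peaking at -6 dBFS, with their peaks lining up
kick = [0.5, 0.1, -0.2]
bass = [0.5, 0.2, -0.3]
keys = [0.5, -0.1, 0.1]

bus = sum_bus(kick, bass, keys)
print(f"bus peak: {peak_dbfs(bus):+.2f} dBFS")  # above 0 dBFS
clipped = sum(1 for v in to_fixed_point(bus) if abs(v) >= 2 ** 23 - 1)
print(f"samples clipped at the 24-bit output: {clipped}")
```

The bus peaks at about +3.5 dBFS, and one sample is flattened at the 24-bit output: exactly the kind of over the text warns can slip by unnoticed.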

Low Band

Keep in mind that speakers reproduce low frequencies best from the centre, with both speakers doing the work simultaneously. There are plugins that take the frequencies from 0 to 250 Hz (or up to 666 Hz) and make them mono. To save processor time, a plugin like this can be placed on the master bus instead of on every track. It keeps the low frequencies in mono, in the centre between both speakers, while leaving the frequencies above 666 Hz free to sit further out. The mono "tunnel" for bass frequencies that such a plugin creates works well to keep low-frequency instruments centred between both speakers all the time, and it is a timesaver.
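Such a bass-mono plugin can be sketched with a mid/side split: the mid (L+R) is left alone and the side (L-R) is high-passed, so the stereo difference below the crossover fades away. The function below is a hypothetical illustration using a gentle one-pole (6 dB/octave) high-pass; real plugins typically use steeper crossovers, so treat it as a rough sketch rather than how any particular plugin works.

```python
import math


def mono_below(left, right, crossover_hz=250.0, sample_rate=48000):
    """Collapse stereo content below crossover_hz towards mono.

    Mid = (L + R) / 2 passes unchanged; side = (L - R) / 2 goes through a
    one-pole high-pass, so low-frequency differences between L and R are removed.
    """
    rc = 1.0 / (2 * math.pi * crossover_hz)
    dt = 1.0 / sample_rate
    a = rc / (rc + dt)  # one-pole high-pass coefficient
    out_left, out_right = [], []
    prev_side = prev_hp = 0.0
    for l, r in zip(left, right):
        mid = (l + r) / 2
        side = (l - r) / 2
        hp = a * (prev_hp + side - prev_side)  # high-passed side signal
        prev_side, prev_hp = side, hp
        out_left.append(mid + hp)
        out_right.append(mid - hp)
    return out_left, out_right
```

Feeding it a 40 Hz tone that is completely out of phase between left and right (a pure side signal) leaves only a small residue of that difference, while a source that is identical in both channels passes through untouched.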

This chart shows different loudness levels. You can see that the frequency range between 250 Hz and 5 kHz is fairly unaffected by playing loud or soft, whereas the range from 20 Hz to 250 Hz differs greatly in perceived loudness when played loud or soft. The higher frequencies also come across differently when played loud or soft. This is how human hearing perceives loudness.

A good starting point ?

Solo your lead vocal and mute all other channels. Set your lead vocal peak level at -9 dB. Set your kick drum peak level at -6 dB, then your snare drum peak level at -7 dB. The peak levels of all other instruments can be set to taste.
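These targets are read here as sample-peak levels relative to digital full scale (dBFS), which is an assumption about how the levels are meant. The sketch below converts between dB and linear gain and works out how far to move a fader so that a track's peak lands on its target; the vocal samples are made-up values.

```python
import math


def db_to_gain(db: float) -> float:
    """Convert a level in dB to a linear amplitude factor."""
    return 10 ** (db / 20)


def gain_to_db(gain: float) -> float:
    """Convert a linear amplitude factor to dB."""
    return 20 * math.log10(gain)


def fader_move_db(samples, target_peak_dbfs: float) -> float:
    """How many dB to move a track's fader so its sample peak lands on the target."""
    peak = max(abs(s) for s in samples)
    if peak == 0:
        raise ValueError("track is silent")
    return target_peak_dbfs - gain_to_db(peak)


STARTING_PEAKS_DBFS = {"lead vocal": -9.0, "kick drum": -6.0, "snare drum": -7.0}

# Hypothetical lead vocal whose loudest sample reaches 0.9 of full scale
vocal = [0.2, -0.9, 0.5]
print(f"lead vocal fader: {fader_move_db(vocal, STARTING_PEAKS_DBFS['lead vocal']):+.1f} dB")
```

Here the vocal fader comes down by about 8.1 dB; the same function gives the kick and snare moves from their own peaks.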

Why does my mix sound so muddy ?

We go into this now because a lot of people have problems with their sound when mixing a set of instruments. As recordings and vocals are added, the mix gets muddier and less clear. The more sounds you add, the more of the open frequency spectrum gets used up, so we should use the spectrum (roughly 5 Hz to 22,500 Hz) wisely! When the lower frequencies are needed for the kick or bass, do not let other instruments sound in that area of the spectrum. The low bottom end especially must be kept clear of obstruction. Every extra sound upsets the bottom end more and more, and since the bottom end eats up dynamic range, it is better to be clear and wise about it. A compressor used to duck other instruments helps here: when the kick hits, it can duck the bass through compression with a sidechain. This extends further when you duck all the other instruments except the main vocals; the main vocal will suddenly sound upfront. Compressing the sounds you need to duck while others are playing is a good dynamic and spectral tool inside a mix. Panning, delay and reverb are of course good tools too, but sometimes, even with a good sound, the mix does not reveal all sounds as clearly as it could. Some mixes or tracks are so wisely mixed that they stand out. A plugin like Wavesfactory Trackspacer is a very good tool for ducking and even improves on compression with sidechaining. Basically it is an insert effect plugin that you use to reduce frequencies on one track in favor of another track. Trackspacer is an award-winning plugin, so take a look at it. Some sounds always fight with each other more than others, such as the kick drum versus the bass, or the lead melody versus the chords.

Simply put Trackspacer on the two opposing tracks and get an instant improvement in separation. In any case, I hope you understand that when you keep the bottom-frequency instruments and the vocal in the middle, the rest of the instruments can be panned. This improves a mix a lot; just use EQ to cut off all bottom-end frequencies that the other instruments do not need. You will find that you can do a lot of cutting on a piano or strings, perhaps even above 200 Hz to 500 Hz. And why not: it creates room for others to come through. To keep the main vocals heard whenever they produce sound, duck all the others to a gain of 0.707 (about -3 dB), which is a reduction of roughly 30%. So when the vocal sounds, the strings and piano are turned down by about 30% of their level, and when there are no vocals, the piano and strings play normally. EQ, compression, balancing, panning and ducking are mixing techniques that first need to be understood and then learned. They are the tools to unmuddy your mix.
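The vocal-over-instruments ducking can be sketched as a simple sidechain ducker: an envelope follower listens to the key track (the vocal) and lowers the target track (strings, piano) to 0.707 of its level while the key is active. This is a simplified stand-in for a sidechained compressor (no ratio or knee), and the attack, release and threshold values are assumptions to tune by ear.

```python
import math


def envelope(signal, sample_rate, attack_ms=5.0, release_ms=150.0):
    """Peak envelope follower with separate attack and release smoothing."""
    attack = math.exp(-1.0 / (sample_rate * attack_ms / 1000))
    release = math.exp(-1.0 / (sample_rate * release_ms / 1000))
    env, out = 0.0, []
    for s in signal:
        level = abs(s)
        coeff = attack if level > env else release
        env = coeff * env + (1 - coeff) * level
        out.append(env)
    return out


def duck(target, key, sample_rate, threshold=0.05, duck_gain=0.707):
    """Turn `target` down to duck_gain (0.707, about -3 dB) while `key` is active.

    The gain glides between 1.0 and duck_gain as the key's envelope rises to the
    threshold, so there is no hard on/off switching.
    """
    gains = (1.0 - (1.0 - duck_gain) * min(1.0, e / threshold)
             for e in envelope(key, sample_rate))
    return [t * g for t, g in zip(target, gains)]


print(f"duck gain 0.707 = {20 * math.log10(0.707):.2f} dB")
```

With a silent vocal the strings pass unchanged; with the vocal present they settle at 0.707 of their level.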

Bass Is The Foundation

Having a tight, punchy low end in your mix is a prerequisite, regardless of whether you make electronic music, rock, pop, folk or any other style or genre. Get the low end right and you have a stable foundation for the rest of the production. Get it wrong, or leave it unrefined, and almost everything else you try will be an uphill struggle. If the bass elements of a mix come through too muddy or boxy, or become acoustically hidden, you will struggle to finish the mix. Bass is the foundation of a consistent, powerful mix.

There must not be a huge number of different elements in the mix whose primary frequency ranges are in the low frequencies. First keep everything else out of the way in the lows with filtering and EQ, so that the kick and bass come through. Then get the bass guitar or synth bassline and the kick drum working together to supply the low-end groove and weight. The environment in which you mix or listen to your music has a huge bearing on the perceived level of the different frequencies. In small rooms it is the bass frequencies that are most affected by poor acoustics and short distances between surfaces. This is because bass sounds, with their longer wavelengths, are much more prone to phase cancellation than higher frequencies. And when music is played very loud over large systems, the apparent frequency response changes again.
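To put numbers on "longer wavelengths", here is a sketch using the usual speed of sound in air (about 343 m/s, an assumed room-temperature value) and the standard axial room-mode formula n * c / (2L). It ignores tangential and oblique modes, so it is only a first approximation of how a small room colours the low end; the 4 m room is hypothetical.

```python
SPEED_OF_SOUND_M_S = 343.0  # in air at roughly 20 °C


def wavelength_m(freq_hz: float) -> float:
    """Wavelength in metres of a sound at the given frequency."""
    return SPEED_OF_SOUND_M_S / freq_hz


def axial_modes_hz(room_length_m: float, count: int = 4) -> list[float]:
    """First axial room-mode frequencies between two parallel walls: n * c / (2 * L)."""
    return [n * SPEED_OF_SOUND_M_S / (2 * room_length_m) for n in range(1, count + 1)]


for f in (50, 100, 1000, 5000):
    print(f"{f:>5} Hz -> wavelength {wavelength_m(f):.3f} m")

# A hypothetical 4 m long room: its lowest modes all land in the bass range
print([round(m, 1) for m in axial_modes_hz(4.0)])
```

A 50 Hz wave is almost 7 m long, longer than many home studios, while a 5 kHz wave is under 7 cm; the 4 m room's first modes fall at roughly 43, 86, 129 and 172 Hz, right where the kick and bass live.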

First of all, consider that even decent home hi-fis do not reproduce frequencies below 40 Hz, and most domestic listening systems do not do much below 80 Hz! So begin your journey towards a good bass by making sure the bass sound provides plenty of energy somewhere in the 70-100 Hz range. This ensures the fundamental bass frequency will not be lost on the vast majority of playback systems. Where exactly the bass hits hardest will partly depend on where the kick drum sits, as you want the two working together.
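One way to see why energy in the 70-100 Hz range matters: on a system that stops at 80 Hz, the fundamental of a low bass note is gone, and what remains are its harmonics, from which the ear tends to infer the missing fundamental (the psychoacoustic "missing fundamental" effect). The sketch lists which harmonics survive; the 80 Hz speaker limit and the 250 Hz upper bound are illustrative assumptions.

```python
def reproducible_harmonics(fundamental_hz, speaker_low_limit_hz, max_hz=250.0):
    """(harmonic number, frequency) pairs of a note that a speaker can still reproduce."""
    result = []
    n = 1
    while n * fundamental_hz <= max_hz:
        freq = n * fundamental_hz
        if freq >= speaker_low_limit_hz:
            result.append((n, round(freq, 1)))
        n += 1
    return result


# Low E on a bass guitar (about 41.2 Hz) on a small system that rolls off below 80 Hz
print(reproducible_harmonics(41.2, 80.0))
```

Only the 2nd to 6th harmonics (82.4 Hz up to 247.2 Hz) make it through, which is why a bass that carries energy around 80-100 Hz still reads as "low" on small speakers.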

Subharmonic synthesizers (generators) work in a similar way to harmonic enhancers, but here you add lower-frequency harmonics rather than higher ones. This can add extra weight and sub-bass frequencies that were not present in the original sound. Some producers also use pitch shifters at this stage for a similar effect, pitching a copy of the bass part (and often the kick drum too) down an octave and mixing it with the original. In a sense this works the same way as layering different components. It is generally accepted that the main bass and kick drum parts should always be panned to the centre, for a couple of reasons. First, this shares the high-level bass energy equally between the two stereo speakers, so you keep maximum impact overall. Second, it maximizes the chance that listeners will always hear the bass properly. For example, you will want to keep the sub-bass and any deep layers central, but mid- or high-frequency elements of the bass sound, such as fizzy distortion or filter swooshes, can be widened.
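A subharmonic generator can be as simple as the "flip-flop" octave divider used in classic analogue octave-down effects: flip the output's polarity on every upward zero crossing of the input, and the result runs at half the input frequency. The sketch below is a bare illustration of that idea, not how any particular plugin works; a usable version would also follow the input's volume envelope and low-pass the square wave before mixing it in under the original bass. The 100 Hz test tone is made up.

```python
import math


def octave_down_square(signal, level=0.5):
    """Sub-octave square wave from a divide-by-two 'flip-flop' on the input.

    The output flips polarity on every upward zero crossing of the input,
    so two input cycles make one output cycle (half the frequency).
    """
    out, state, prev = [], level, 0.0
    for s in signal:
        if prev <= 0.0 < s:  # upward zero crossing
            state = -state
        out.append(state)
        prev = s
    return out


def upward_crossings(signal):
    """Count upward zero crossings, a rough way to measure frequency."""
    return sum(1 for a, b in zip(signal, signal[1:]) if a <= 0.0 < b)


sample_rate = 8000
bass = [math.sin(2 * math.pi * 100 * i / sample_rate) for i in range(sample_rate)]  # 1 s at 100 Hz
sub = octave_down_square(bass)
print(upward_crossings(bass), upward_crossings(sub))  # the sub has about half as many
```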

The Bass Drum and Bass

I find that in most cases the low-end frequencies from 5 Hz to 120 Hz are the most important to take a close look at, depending on what you are working on and with. Whether you use speakers or headphones, get used to your gear by listening to commercial music and other people's mixes. Make sure the amount of bass and low frequencies is not overindulgent, so compare and know your equipment. The 5-50 Hz range in particular is easily forgotten, yet it is most important not to have too much energy there. Why? Because it lifts and sweeps all other frequencies along with it. So the balance and level here is where you can get your music to sound really good. The main problem is that our human hearing is not very good in these ranges, so you may need to see (on a meter or analyzer) as well as hear. If you are using headphones, spend a long time with them listening to all kinds of music, so you know how they sound and what the low end sounds like. It is very easy to overdo the low end, and that will hurt the rest of your mix. Some apply steep cuts below 30 Hz; cutting here is the best thing to do, but know how much. If the low end is good, steady and correct, it will lift your mix to a commercial level.

Instruments.

Everything you record on a track is likely to be an instrument. Common instruments are drums, bass, guitar, keyboard, percussion, vocals, etc. So when we talk about instruments we mean the full range of available instruments or sounds, each placed on its own single track. When you mix, you only use the instrument faders to adjust the volumes (levels) of the different instruments or recorded tracks (don't touch the master fader). Hopefully you have recorded every instrument (drums, bass, guitar, keyboard, vocals, etc.) separately on its own track, and on your mixer they are laid out from left to right. Each fader adjusts the volume (level) of a single instrument or track, and the total is summed by the master bus fader. It would be wise to start with the drums on the first faders and then the bass. The rest of the faders can hold guitar, keyboard, vocals, etc., whatever instruments you have recorded.

Separation and Planning, Labeling and placement on a mixer.

Most likely you will start with the bass drum on fader 1 and work upwards with snare, claps, hi-hat, toms, etc., each on its own fader (2, 3, 4, 5, 6, etc.). So the whole drum kit sits on the first faders. Then place the bass, guitar, piano, keyboard, organ, brass, strings, background vocals, vocals, etc. on the next faders. You can use any system you like. If you have send tracks, place them on the far right of the mixer, just next to the master fader. Be sure to label all tracks, and set the fader to 0 dB and the pan to centre on each mixer track. Labeling the track (instrument) names on the mixer keeps everything visible; most digital sequencers let you name a track on the mixer. It is also good to work from the loudest instruments (drums, bass, etc.) towards the softer ones. Plan this on your mixer from left to right, faders 1, 2, 3 and onwards. Most likely the bass drum will be the loudest peaking sound, so place it first, on the left. If you have no drums on your tracks, just work out which sounds will be mixed and heard loudest and which softer.

To make this easier to understand, we use the labeling of the drums as an example.

Keeping things separated when recording drums is a must. You can do more in drum mixing when the bass drum, snare, claps, hi-hats, toms, etc. are each recorded on their own track. This means using more tracks on the mixer, but you are rewarded with flexibility in mixing. Nowadays, with digital recording, sequencing and sampled instruments, drums often come from a sampler or drum synth, or are recorded with multiple microphones. As long as your recording technique lets you separate tracks or instruments, you will profit from this while mixing. For sampled instruments or synthesizers that can output to several tracks, it can also be rewarding to separate each sound onto its own mixer track. Again, spreading and separation work best and are the most common mixing technique. Deep sounds spread across the whole panorama are not a good thing: the fundamental instruments (bass drum, snare, bass, main vocals) must have a centre placement, and any variation off-centre will be noticeable. Follow the panning rules for fundamental and non-fundamental instruments: fundamental lower frequencies are centred and higher frequencies go further out; lower non-fundamental instruments sit more towards the centre, higher instruments further out. Use a goniometer and a correlation meter. When working in a DAW (digital audio workstation), keep a goniometer, correlation meter, level meters and spectrum analyzer available as constant checking tools, perhaps even on a second monitor or another computer.
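A correlation meter essentially computes the normalised correlation between the left and right channels. The sketch below does this over a whole buffer; real meters compute it over a short moving window, and the test tone is made up for illustration.

```python
import math


def phase_correlation(left, right):
    """Correlation between L and R as shown on a correlation meter.

    +1 = identical (mono), 0 = unrelated, -1 = one channel is the inverse of the other.
    """
    num = sum(l * r for l, r in zip(left, right))
    den = math.sqrt(sum(l * l for l in left) * sum(r * r for r in right))
    return 0.0 if den == 0 else num / den


tone = [math.sin(2 * math.pi * i / 64) for i in range(256)]
print(f"{phase_correlation(tone, tone):+.2f}")                # mono source: +1.00
print(f"{phase_correlation(tone, [-x for x in tone]):+.2f}")  # polarity flipped: -1.00
```

Readings that hover near or below zero on centred instruments such as the kick, bass or main vocal are a sign that something is out of phase.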

Sound Systems.

As with many questions about sound systems, there is no one right answer. A well-designed mono system will satisfy more people than a poorly designed or implemented two-channel sound system. The important thing to keep in mind is that the best loudspeaker design for any facility is the one that works effectively within the programmatic, architectural and acoustical constraints of the room. To paraphrase the Rolling Stones: "You can't always get the system that you want, but you find sometimes that you get the system that you need." If the facility design (or budget) won't support an effective stereo playback or reinforcement system, it is important that the sound system be designed to be as effective as possible anyway. For recording, a room with as little acoustic character as possible is preferred; for monitoring, a room with some acoustics (room reverberation). Quality is an assurance, but when on a budget, at least choose equipment with little or no background noise.

Mono or Stereo.

Well, this question is asked and debated. But for me, and many others, I like all tracks to be stereo, so I do not like to record in mono at all. Still, we refer to the fundamental instruments (bass drum, snare and vocals) as panned straight to the centre and upfront. These can be recorded in mono or have their original signal converted to mono, which ensures that the left and right speakers play exactly the same and makes them appear straight in the centre where they should be. Most of the time I convert mono tracks to stereo (left and right the same), or I just record in stereo even when the source is mono. So it is no mono for me, but this can be debated. Of course, I do respect that the fundamental instruments stay straight in the centre all the time. Especially when using a computer or other digital system with recording and sequencing software, working in stereo all the time lets you have all effects and channels in stereo. Most digital mixers and effects like delay, reverb, phaser, flanger, etc. work in stereo and need to sound in stereo anyway. Some digital systems do not perform as well when playing a mono signal, so stereo creates fewer problems with digital systems. Of course, working completely in mono would avoid correlation problems, but we mix in stereo on two speakers. It is better to have all tracks in stereo, even when a bass or guitar was actually recorded in mono. I always convert from mono to stereo or start by recording in stereo; this is just advice. As long as the original signal is exactly the same left and right, you can work with a mono signal in stereo mode. Knowing your tracks are all stereo, you no longer have to worry about mono versus stereo tracks at all (or whether an effect or plugin handles them well). You just know it's stereo all the time! This can make setting up easier.
A well-recorded mono sound source, on the other hand (recorded in mono, or in stereo with both channels identical), can be placed on the sound stage with relative ease. This lets you handle much better which effects should be applied, and how, with regard to your other neighboring instruments and their positions and frequencies in the mix. Stereo sounds that sway around the panorama, like synths, can be hard to handle, especially when you have a bunch of these swaying instruments in your mix. In the natural world, a dry signal is likely to be transmitted as mono, but with reverberation added, and is perceived as stereo by our two ears. In steady mixing, mono signals also work best: even when they fill a stereo track, both channels playing the same sound gives a steadier and more natural mix. Remember that you can always add an effect later to make instruments sway around. So recording a dry and clean signal pays off later, when mixing needs to be free and creative. If two mono parts share the same frequency range, simply try panning them slightly, one to the right and the other to the left; a couple of notches either side is usually enough. When you record in stereo, use two mono channels to capture left and right respectively, which you can then treat as mono or as stereo. Test your mix in mono as well as in stereo: use the mono button on the mixing desk to sum the channels into one mono channel. This puts all the sounds in the centre. Listen for phasing or any sounds that might disappear, so you can correct them. Use a correlation meter, goniometer, spectrum analyzer and level meter on the master bus so you have checking tools available when needed.
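Two quick checks for the mono/stereo points above, as a sketch: whether a "stereo" track is really dual mono (left and right identical), and how much level is lost when the mix is folded down to mono. The fold-down assumes the mono button sums (L + R) / 2; real desks and DAWs may use a different summing gain, and the test signal is made up.

```python
import math


def is_dual_mono(left, right, tolerance=1e-6):
    """True when L and R carry the same signal, i.e. a mono source stored as stereo."""
    return len(left) == len(right) and all(abs(l - r) <= tolerance for l, r in zip(left, right))


def mono_to_stereo(mono):
    """Copy a mono track to both channels so it sits exactly in the centre."""
    return list(mono), list(mono)


def rms(samples):
    """Root-mean-square level of a list of samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def mono_folddown_change_db(left, right):
    """Level change (dB) when the mono button sums L and R as (L + R) / 2.

    0 dB means nothing is lost; large negative values warn of phase cancellation.
    """
    mono = [(l + r) / 2 for l, r in zip(left, right)]
    mono_rms = rms(mono)
    if mono_rms == 0:
        return -math.inf
    return 20 * math.log10(mono_rms / ((rms(left) + rms(right)) / 2))


guitar = [math.sin(2 * math.pi * i / 50) for i in range(500)]
left, right = mono_to_stereo(guitar)
print(is_dual_mono(left, right), mono_folddown_change_db(left, right))
print(mono_folddown_change_db(guitar, [-x for x in guitar]))  # -inf: cancels completely
```

The dual-mono guitar loses nothing in mono, while the polarity-flipped copy disappears entirely, which is the worst case of the "sounds that might disappear" the mono check is meant to catch.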

Mixing in Mono or Stereo ?

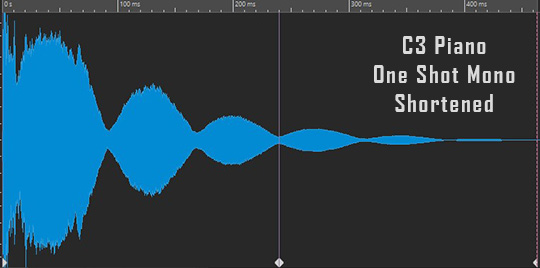

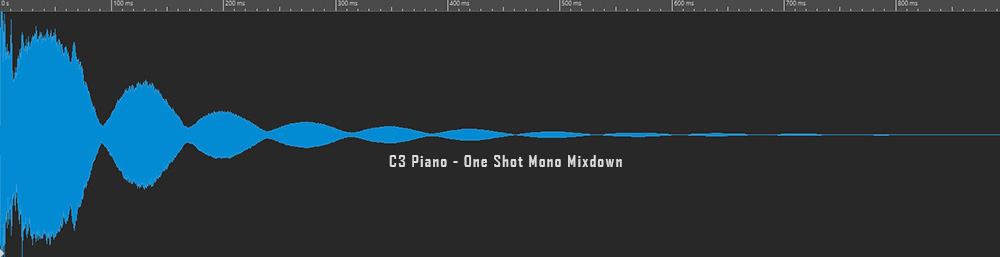

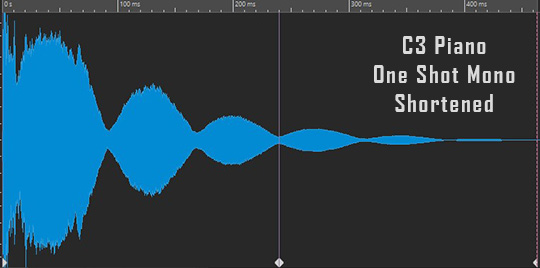

I always used a sampler as my main instrument, recording samples in stereo and spreading them across the keys of the sampler, and I used VST Instruments in stereo because I always thought stereo was the way to go. But in the early days of tracker software (like Renoise), I used One Shot Mono Samples as instruments, for example a piano on key C3 as a One Shot Sample. Now, with sequencers like Cubase and lots of VST Instruments and samplers like Steinberg HALion or Steinberg Groove Agent, you get lots of samples, and many of them are in stereo. These samples have long tails, because they are captured with the complete sound and its reverberation. All of this became processor-heavy and a lot to handle sample-wise, and the instruments' longer tails also seemed to overcrowd the mix.

Using One Shot Mono Samples

The sounds human hearing picks up in nature are basically all mono sound sources: nothing in the real world comes out as a stereo sound. When you drop your keys on the floor, that is a mono sound. More precisely, it is a mono sound with a reverberation tail, which depends on where you are (in a room or outside). So the sound of the keys hitting the floor is very short and mono; its reverberation is also mono, but it reaches you from many directions. We hear in stereo with two ears because we need to know the direction a sound comes from.

To keep it short, we use only the part where the keys hit the floor, for example by recording it as a sample of only 2 seconds. We can cut it even further: we only need the dry initial hit on the floor, so we cut off the reverb tail. What remains is a very short mono One Shot Sample, less than 1.5 seconds long.

The same goes for sampling a piano. Most people think we need many samples with long tails to recreate a piano in a sampler, which is why most piano libraries and digital piano instruments contain so many samples. But do we really need that? Most of you will believe it is all needed: big samples and lots of them mean more quality, right?

The profit when working with Mono Instruments

For a piano, I nowadays sample only the key C3 and keep it as short as possible. I put that sample in a sampler or a Sampler Track in Cubase, and that is my piano sound. Many would say this sample does not represent the original piano, and that is true. But in the real world everything is mono, so when I play the One Shot Mono Piano Sample on a keyboard, it of course sounds mono. The catch is that you can easily pan the mono piano and it stays where you panned it, whereas a stereo piano may float around the stereo field. That makes things much easier. If I then use a send on the mono piano, for example a stereo reverb behind it, I recreate reverberation as in the real world!
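
To show why a panned mono source "stays where you panned it", here is a sketch of one common pan law, constant-power (sine/cosine) panning. This pan law is an assumption chosen for illustration, not necessarily what Cubase or the author uses. The two gains are fixed for a given pan position, so every sample of the mono piano lands in the same place; at center each side gets about 0.707 (roughly -3 dB), so the total power stays constant as you pan.

```python
import math
from typing import List, Tuple


def constant_power_pan(mono: List[float], pan: float) -> Tuple[List[float], List[float]]:
    """Place a mono source in the stereo field.

    pan: -1.0 = hard left, 0.0 = center, +1.0 = hard right.
    Gains follow cos/sin of an angle from 0 to 90 degrees, so
    gain_left**2 + gain_right**2 == 1 at every position.
    """
    if not -1.0 <= pan <= 1.0:
        raise ValueError("pan must be between -1.0 and 1.0")
    theta = (pan + 1.0) * math.pi / 4.0
    gain_left, gain_right = math.cos(theta), math.sin(theta)
    # The same two fixed gains apply to every sample, so the source cannot drift.
    return [s * gain_left for s in mono], [s * gain_right for s in mono]


if __name__ == "__main__":
    piano = [1.0, 0.5, 0.25]  # hypothetical dry one-shot
    left, right = constant_power_pan(piano, -0.3)  # slightly left of center
    print([round(x, 3) for x in left], [round(x, 3) for x in right])
```
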

The profit comes when you use all instruments (drums, bass, guitar, piano, strings, melody, vocals, etc.) as short mono One Shot Samples that contain only the hard sound and not the tails: you can then put your own chosen reverb(s) behind them. That works much better for recreating natural human hearing in your mix. From a single mono One Shot Sample, a natural listening impression can be created with delays or reverbs of your own choice. Because the samples have no long tails with reverberation already recorded into them, your mix does not fill up with all kinds of different reverberation. That makes short mono One Shot Samples a legitimate option: by first creating a dry mix with short-tailed One Shot Samples and later adding your own effects (like reverb or delay), you can control the mix much better.

Your mix will not be so overcrowded

Using One Shot Mono Samples, especially short samples that contain only the main sound and not the reverb tails, makes your mix snappy, light and controllable. Losing all those pre-recorded reverb tails on every instrument clarifies the mix: you can adjust the dry mix much more precisely and hear everything much better. Then, at a later stage, you can add your own reverberation!

Long samples with a reverb tail recorded into them may seem fine, but when many different pre-recorded instruments are used, each with its own recorded reverberation, the different-sounding tails confuse the human ear. That crowds your mix and makes it hard to steer and confusing to mix to a good result.

Short One Shot Samples in mono solve the problem of having all kinds of unknown reverberation, because the reverb tails are simply not there. They do not confuse the human ear (a straight, natural mono sound, like in nature, is used), do not crowd your mix, and are much easier to steer and mix. So think in terms of nature and human hearing: a sound in nature starts as mono, picks up reverberation from the room or environment, and we humans catch that with our two ears. The reverberation lost by cutting all samples short can be compensated with your own reverberation inside the mix. That is a much clearer way to get a good dry mix first and then add the effects you need. For me it is a much easier way of working and of getting a mix to sound good. Working first with sampled instruments as mono One Shot Samples, and then recreating the reverberation with reverbs and delays in your mix's effects sections, gives a more natural sound, as it would be heard in the real world.

A second advantage is that working with just one sample per instrument makes it much easier to work with the samples in an audio editor. A library piano, like a Steinway, can have lots of samples (maybe even hundreds), and editing or controlling each of them would be a lot of work. With just one sample per instrument, tuning is easy: the C3 Piano One Shot Sample can be cut to size and tuned easily, and it will play in tune across the whole keyboard. It can also be shaped inside the sampler with its ADSR envelope very easily. So why work with hundreds of uncontrollable samples, with different unknown reverb tails across all instruments? That confuses the human ear and your mix. Working instead with one mono, short-tailed One Shot Sample per instrument gives you a dry mix that you can adjust much better, that is easy on the ears, and that is a more natural beginning of a mix. You can always recreate your own reverberation inside the mix as effects.
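
Playing one C3 sample "in tune across the whole keyboard" works because a sampler replays the sample faster or slower: in equal temperament each semitone is a frequency ratio of 2^(1/12), so one octave up doubles the playback rate (and halves the length). The sketch assumes C3 is MIDI note 60, the Steinberg/Yamaha naming (some software calls note 60 C4), and uses a naive linear-interpolation resampler without anti-aliasing. It illustrates the principle only; it is not how HALion or the Cubase Sampler Track actually resample.

```python
from typing import List


def playback_rate(note: int, root_note: int = 60) -> float:
    """Speed factor for playing a sample recorded at root_note on another key.

    Equal temperament: each semitone multiplies the frequency by 2 ** (1/12).
    root_note=60 assumes the sample is C3 in Steinberg/Yamaha naming.
    """
    return 2.0 ** ((note - root_note) / 12.0)


def repitch(sample: List[float], rate: float) -> List[float]:
    """Naive linear-interpolation resampler (no anti-aliasing filter).

    Reading the sample `rate` times faster raises the pitch and shortens it.
    """
    if rate <= 0.0:
        raise ValueError("rate must be positive")
    out: List[float] = []
    last = len(sample) - 1
    pos = 0.0
    while pos <= last:
        i = int(pos)
        frac = pos - i
        nxt = sample[i + 1] if i < last else sample[i]
        out.append(sample[i] * (1.0 - frac) + nxt * frac)
        pos += rate
    return out


if __name__ == "__main__":
    print(playback_rate(72))            # 2.0: C4 is one octave above C3
    print(round(playback_rate(67), 4))  # 1.4983: G3, close to a pure fifth (1.5)
```
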

The way of working, then: every instrument is a short-tailed mono One Shot Sample, you pan them across the stereo field, add reverberation later inside the mix, and control the samples easily in an audio editor. It works better when all sounds are reverberated the same way; as humans we like to hear all sound coming from one room, one live concert, one environment. Instead of working with all kinds of library sounds that are stereo and carry reverberation from all different origins, try working with One Shot Mono Samples!
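
The "one room for every sound" idea maps onto send effects: every dry mono track sends some of its signal to one shared effect bus, and that single return is added to the dry mix. In this sketch a single feedback delay (a comb filter) stands in for the reverb; a real algorithmic reverb combines many such delay lines (Schroeder-style designs use parallel comb filters followed by all-pass filters). The function name and parameters are hypothetical, and no extra tail is appended, so pad tracks with silence to hear the decay.

```python
from typing import List


def mix_with_shared_room(tracks: List[List[float]], sends: List[float],
                         delay_samples: int, feedback: float,
                         return_level: float) -> List[float]:
    """Dry tracks plus one shared 'room' effect fed by per-track send levels.

    The room is a single feedback delay, a stand-in for a real reverb:
        wet[n] = bus[n - D] + feedback * wet[n - D]
    """
    if len(tracks) != len(sends):
        raise ValueError("one send level per track")
    if delay_samples < 1 or not 0.0 <= feedback < 1.0:
        raise ValueError("need delay_samples >= 1 and 0 <= feedback < 1")
    length = max((len(t) for t in tracks), default=0)
    dry = [0.0] * length
    bus = [0.0] * length  # the single send bus every track feeds
    for track, send in zip(tracks, sends):
        for n, s in enumerate(track):
            dry[n] += s
            bus[n] += s * send
    wet = [0.0] * length
    for n in range(delay_samples, length):
        wet[n] = bus[n - delay_samples] + feedback * wet[n - delay_samples]
    return [d + return_level * w for d, w in zip(dry, wet)]


if __name__ == "__main__":
    kick = [1.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]
    snare = [0.0, 0.0, 0.0, 0.8, 0.0, 0.0, 0.0]
    print(mix_with_shared_room([kick, snare], [0.2, 0.5],
                               delay_samples=2, feedback=0.5, return_level=1.0))
```
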

Creating a Mono One Shot Short Sample from a Stereo Sample

Take one stereo sample, for example the C3 key of a piano sample library, or record the key C3 from any source instrument. Cut the empty beginning and end to make the sample smaller, with a good start point and ending.

Create a mono mixdown; most audio editors can do this.

Shorten the end by listening to which part you really need and which part at the end can be cut off without real loss. Fade out the end of the sample. You now have a C3 One Shot Piano that can be very useful in a sampler.
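
The three steps above (cut the silence, mono mixdown, shorten and fade out) can be sketched in Python. The silence threshold, the 1.5-second cap (borrowed from the length mentioned for the keys example) and the linear fade are assumptions for illustration; in an audio editor you would choose these by ear, and the function name is hypothetical.

```python
from typing import List


def make_one_shot(left: List[float], right: List[float], sample_rate: int,
                  threshold: float = 0.001, max_seconds: float = 1.5,
                  fade_seconds: float = 0.1) -> List[float]:
    """Turn a stereo sample into a short mono one-shot with a faded end."""
    # Step 1: cut the empty beginning and end. A frame counts as audible
    # if either channel exceeds the threshold.
    audible = [i for i, (l, r) in enumerate(zip(left, right))
               if max(abs(l), abs(r)) > threshold]
    if not audible:
        return []
    start, end = audible[0], audible[-1] + 1
    left, right = left[start:end], right[start:end]
    # Step 2: mono mixdown by averaging the two channels.
    mono = [(l + r) / 2.0 for l, r in zip(left, right)]
    # Step 3: shorten to the cap, then apply a linear fade-out ending at zero.
    mono = mono[: int(max_seconds * sample_rate)]
    fade = min(len(mono), int(fade_seconds * sample_rate))
    for k in range(fade):
        mono[len(mono) - fade + k] *= 1.0 - (k + 1) / fade
    return mono


if __name__ == "__main__":
    sr = 10  # unrealistically low rate, just to keep the numbers readable
    left = [0.0, 0.0, 1.0, 1.0, 1.0, 1.0, 0.0, 0.0]
    right = list(left)
    print(make_one_shot(left, right, sr, fade_seconds=0.2))  # [1.0, 1.0, 0.5, 0.0]
```
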

Human hearing

Generally, a natural mix will be more understandable to the listener when every sound is actually broadcast in mono. It is true that we use stereo signals in a mix, but everything you hear in the real world is a mono sound with the reflections of its surroundings on it. Human hearing uses two ears, stereo, because your brain needs to know where a sound is coming from; what you hear is in fact a mono sound source with reverberation on it. Only technology turns mono into stereo; in nature, a stereo sound does not exist. A mono source still has a direction, because our two ears sit apart from each other and hear slightly different signals, and the brain estimates the direction from that difference. Where is the mono sound placed? Left or right? In the middle? Above? Far or near? Much of that impression, especially distance and space, depends on the reverberation added. So we could use only mono instruments in a mix and add our own reverberation on top of them in the same mix. When we first get rid of all recorded reverberation, that is, use short mono One Shot Samples, we have the sound as it exists in nature before any reverberation: a dry, short sample. The world around us adds reverberation to each sound, but when we leave the recorded reverberation inside our samples, our mix already contains many different reverberations. Maybe that is why you struggle to get the reverberation right in your mix? Why not use only One Shot Mono Samples for each instrument? We then have a good dry mix to start with, and can add one kind of reverberation to the mix (using reverb, delay, etc.), so the whole mix lives in one reverberation. Humans like to hear sound as it is in nature.
If you stand in a room and play music there, you hear the reverberation of that room, and when you start talking there you hear the same reverberation. Wherever you are, that place's reverberation is applied to everything that sounds. In a mix we can use One Shot Mono Samples and mix the reverberation into or onto them.

Basic Mixing.

This is hard to explain, but an example will help you get started. Say you have recorded a pop, rock, house or ballad song. Now that the recording is finished (composition-wise and recording-wise, in audio or MIDI), you need to mix it to make it sound better and more together. First, separation is needed: cleaning and clearing the single tracks and getting the mix as dry as possible. You can choose stereo instruments, mono instruments or even One Shot Mono Instruments as explained above. Have you been mixing in stereo for a long time without getting the mix right? Try aiming for a One Shot Mono Instrument mix on stereo lanes; you can add the effects needed for reverberation (room and direction) later. Either choose a complete stereo mix, or go for mono instrument samples in a stereo mix. Second, quality and togetherness are what you are aiming for, mixing it up (groups toward the master bus, summing up). What you are not aiming for is loudness or level: how loud your mix sounds matters less than having it sound well together. Togetherness is the goal. So watching the VU meter hit maximum levels is not important while mixing, and pushing all faders up all the time will get you nowhere. Forget how loud your mix sounds; that is called mastering, a whole different subject that comes after you have finished mixing. Mixing is what you are doing here, and that is why it is called mixing: it means cleaning, cutting and separation as well as togetherness.

Mixing steps.

We have three sections to complete while mixing from beginning to end. First the Starter Mix, where we set up a mix and start working inside dimensions 1 and 2. Then the Static Mix, where we apply dimensions 1 and 2 and introduce dimension 3, completing the three-dimensional stage plan. Finishing the Starter and Static mixes gives a basic static reference mix for later use; keep working on it until the static mix stands like a house on its foundation. Finally the Dynamic Mix, where we introduce automated or time-lined events. To make progress in mixing, plan on finishing your projects within a predetermined period of time; this is the only way to see your development over time. Don't fiddle around with the DAW's functions but be concrete: improve your mixing skills and decision-making, then learn to trust them. Give yourself a limited amount of time per mix. A static mix should be 80% done after a few hours of work; the rest is fine-tuning, which takes the largest amount of time. Build confidence in your rhythmic hearing: trust your ears to judge rhythmic precision and keep it natural. A DAW and its graphic interface let you see everything, but trust your ears, not the display. When rhythmic timing matters, your ears decide whether something is early, late or spot on. When you are not happy with the results, make a copy of your project, remove all insert and send effects and plugins, reset levels and EQ, and put all panning to center. Start again from the beginning and redefine your stage plan with a clear mixing strategy. The key to a good mix lies in intelligently distributing all events across the three spatial dimensions: width, height and depth.

The Starter Mix.

In the starter mix we stay inside dimensions 1 and 2. We will explain the dimensions later on, but for a starter mix we only use fader, level, balance, pan, EQ and compression, and sometimes a few more tools such as a gate or limiter. Our main goal is togetherness, but paradoxically we will explain why we need to separate first: a starter mix only starts off well when we first separate the bad from the good. Rushing toward togetherness never does any good, so it comes second. To understand what we must do (our goal for starter mixes), we now need to explain the stage and the three dimensions.

Panning Laws.